Key takeaways:

- AI’s unprecedented economic impact and adoption: Antonio examines how AI is transforming every sector, as governments and businesses invest billions in generative AI infrastructure and applications.

- The new AI billionaire class and corporate transformation: With 500 AI companies now valued at over $1 billion, he explores how AI is driving unprecedented business disruption, with the World Economic Forum predicting 92 million jobs disappearing while 170 million new roles emerge.

- Responsible corporate AI adoption and emerging risks: There remain critical gaps in AI implementation, raising urgent questions about hallucination, bias, cybersecurity, ethics, and human rights due diligence in corporate deployment.

- AI transforming access to justice: AI could save lawyers up to five hours per week as the global legal tech market grows to $64 billion by 2032. But UNESCO data shows only 9% of judicial operators have received adequate training, amplifying risks around bias, data security, and sentencing decisions.

- Philanthropy’s critical role amid aid collapse: With a $4.2 trillion funding gap threatening UN Sustainable Development Goals, can private foundations, corporations and philanthropists fill the vacuum left by government cuts across healthcare, climate resilience, journalism and democratic defence?

Full transcript

Good morning, everyone, and welcome back to Trust Conference.

What a great day we had yesterday. Powerful topics, excellent speakers, and great energy all round. And if you’re joining us for the first time, you are very welcome. Thank you for being part of this unique community. You are in good company.

Across the room, you’ll find business, legal, media and civil society leaders working to defend free, fair and informed societies. That is the mission of the Thomson Reuters Foundation and the reason why we continue to make Trust Conference free to attend.

By bringing together our beneficiaries, our partners and those who share our mission, we hope to foster collective leadership, so that we – as a community – can be actively engaged in addressing some of the most critical issues of our time. Our goal is that the exchange of expertise that takes place here at Trust Conference will allow us to shape the key decisions that will affect our tomorrow.

The information crisis

As we discussed yesterday, we are at an inflection point. Democracy is under assault. Autocracies are on the rise. They are being fuelled by a tidal wave of nationalism, populism, and by the acceleration of AI. The old world order is fragmenting, and new hubs of power are emerging. Meanwhile, democratic institutions and fundamental civil rights are being questioned and quashed.

Yesterday, we focused on the impact that this is having on the information ecosystem and the rule of law. Access to independent news and access to the law – two critical tenets of democracy – are under attack. This is part of an authoritarian playbook that is being deployed around the world.

We heard from journalists, civil society leaders and even legal professionals, who experienced first-hand the abuse of the legal system in established democracies that are now turning into autocracies. We also heard from world-leading experts, who helped us dissect the new power dynamic at play between tech leaders and autocrats.

AI’s transformative power

Today, we’ll look even further at the way in which technology is redefining power. The race to predict, control and harness the potential of AI is on. We are only just beginning to understand what’s at stake, in the context of business, geopolitics, the rule of law, and society.

These are all themes that we’ll explore today, examining how new technologies can bolster, rather than undermine, our efforts to shore up democracy. Is Artificial Intelligence the ultimate public interest tool? Can we build an AI infrastructure rooted in trust, safety, accountability, transparency and international standards?

And so, today, we’ll be looking at issues such as AI governance, but also at the wider impact of AI adoption in newsrooms, courts, and across business and government, highlighting both the risks and the huge opportunities that this technology can bring to society.

Artificial Intelligence is already impacting every aspect of our lives – from business to healthcare, from agriculture to finance. This past year alone, the innovations we’ve seen are mind-boggling. Just think of the AI software that can examine the brain scans of stroke patients twice as accurately as professionals. Or the AI models that can detect diseases before the patient even displays symptoms. Or consider the fast-growing climate-tech sector, where AI is being used to reduce carbon emissions, create renewable energy technologies, and build climate resilience by using predictive analysis.

The amount of investment underpinning this technological transformation is unprecedented. In the past year, both governments and business have made significant investments in AI, with a particular focus on generative AI, tech infrastructure, and specialised applications. The United States has solidified its position as the global tech leader, investing more resources than China and the EU combined. Other countries are also witnessing record-breaking tech expansion.

The UK – for example – is now the third-largest AI market in the world, with an AI ecosystem valued at $92 billion, the biggest in Europe. And just last month, the UK secured £150 billion worth of tech investment from US companies, including Microsoft and Google. This is expected to create almost 8,000 jobs.

The UK Government is investing in AI to improve public services. A recent trial found that AI is helping developers across 50 government departments, allowing them to write code faster. This level of efficiency has the potential to unlock up to £45 billion in taxpayer savings.

The European Union is adopting a dual strategy, focusing on both regulation and investment to foster a unique “human-centric and trustworthy” AI ecosystem. Across the EU, private investment, particularly in generative AI, still lags behind the US and China, but the bloc is making concerted efforts to bridge this gap through a mix of policy and investment. These include the InvestAI initiative, which aims to mobilise up to 200 billion euros.

Compared to two years ago, when we started talking about AI on this stage, the applications are now many and affect every single industry. Everyone in this room will have been exposed to agentic AI – perhaps even without knowing it. And that’s because, when it comes to reasoning, the capabilities of AI are literally taking off.

Consider this. When OpenAI launched ChatGPT in 2022, the system could easily pass secondary school-level exams, but it struggled with broader reasoning. Today, AI models have the cognitive level of university graduates. GPT-4 can answer 90% of the questions on the US Medical Licensing Exam correctly. And its latest iteration, GPT-5, offers PhD-level expertise in areas such as coding.

Today, around the world, agentic AI bots are helping millions of people by answering customer inquiries, drafting marketing emails in different languages, and even reading the news. ChatGPT is currently used by 700 million people a week. And OpenAI’s CEO, Sam Altman, is confident that soon, ChatGPT will have more conversations per day than all human beings combined.

Thanks to billions of dollars of investment, we have seen huge advances in AI applications that are now able to perform specific tasks faster, more accurately and using less energy. As a result, AI startups are now responsible for creating more billionaires than at any other time in history.

There are currently 500 private AI companies worth $1 billion or more. A hundred of those were founded in the last two years alone. And so it’s no surprise that the tech sector is now the wealthiest industry on the Forbes Billionaires list. It’s worth a staggering $3.2 trillion.

Translated into practical terms, this means that we are witnessing the rise of a new group of very powerful individuals with a high level of influence. And what these new billionaires decide to do with this influence is a decision that rests entirely with them, as private citizens. What we can safely assume, though, is that their influence will only grow.

Goldman Sachs predicts AI will boost global GDP by $7 trillion over the next 10 years. McKinsey is even more bullish: it estimates that growth will be in the region of $17 trillion to $25.6 trillion per year.

This is a significant discrepancy, testament to the huge number of unknowns. Unknowns in terms of the capabilities of this new technology. But also, in terms of how prepared businesses and governments are to adapt to the changes that AI is ushering in. The biggest unknown, though, is related to risk.

Yesterday, we touched on some of the risks introduced by AI, as it intersects with independent media and civil society. We looked at issues such as disinformation, data privacy, copyright, alongside product development, talent drain and surveillance.

Today, we’ll be examining AI deployment through a different lens. We’ll be focusing on how AI is impacting responsible business practices and access to justice – both core pillars of our work at the Thomson Reuters Foundation.

PwC believes the pressure for businesses to radically transform themselves is at the highest level seen in the last 25 years. It says a staggering $7.1 trillion in revenues is about to shift between companies, this year alone. And so, all over the world, CEOs are rushing to adopt AI in order to keep their businesses competitive.

There are concerns around job losses: the Future of Jobs report produced by the World Economic Forum, for example, predicts that 92 million jobs are going to be gone in the next five years. The same report also suggests that 170 million new roles will emerge. But the reality is that these numbers hide a multitude of complexities.

Jobs won’t be like-for-like swaps in the same companies, in the same geographies, or even in the same sectors. In just three years’ time, for example, it’s predicted that 75% of software engineers will use AI coding assistants. And we can already see how industries where processes can easily be automated are heavily leveraging machine learning to maximise efficiency.

IBM, for example, is using AI tools for customer service, saving approximately 24% of its costs. In the past three months, Accenture has cut 11,000 jobs and said staff who can’t be retrained to use AI will be exited. Of course, not every business is moving at the same pace and with the same strategic clarity.

The Future of Professionals report, produced annually by Thomson Reuters, offers some interesting insights. The study is based on the views of more than 2,000 professionals and C-suite executives from over 50 countries. This year, it found that nearly half of organisations have invested in new AI-powered technology, and that 30% of professionals are using AI regularly. But critically, only 22% of organisations have an AI strategy.

Responsible AI adoption

And that’s why the conversation around corporate AI adoption is so critical. Because the way in which businesses deploy AI technology will have systemic consequences, for their shareholders and their stakeholders. Think about it. These technologies cross geographies, span global value chains, and have capabilities that are constantly accelerating.

So, how can we ensure that corporate AI adoption does no harm? How will it affect workers, global supply chains and the environment? And how long will it take for investors to demand answers about the materiality of AI-related risks? Is a global framework for public interest AI even possible, when ownership and know-how are in the hands of a small number of private companies? And how can AI play a role in accelerating more equitable access to justice? These are some of the questions we’ll attempt to answer today.

We’ll be joined by a dear friend, Vilas Dhar, President of the Patrick J. McGovern Foundation. Vilas is a leading voice in global AI governance, and I am delighted that he’s here with us today. Vilas has a background spanning human rights, engineering, and public policy. He was appointed by UN Secretary-General António Guterres to the UN High-Level Advisory Body on Artificial Intelligence.

Our two organisations have been working together for a number of years now, to support media leaders, tech journalists and civil society organisations working at the forefront of AI ethics, data and digital rights.

Our work with journalists will continue to be featured on today’s programme. Specifically, we’ll examine the ‘digital divide’ affecting the media industry. In an era when the sector is facing shrinking margins and increased costs, the advent of GenAI has introduced new critical challenges to grapple with.

We’ll look at why AI adoption in the newsroom is seen by some as a double-edged sword. A survey we published earlier this year shows that over 80% of journalists are using AI for a wide range of tasks. But half of them admitted to being completely self-taught. They told us they lack policies, tools and training.

Today, we’ll be hearing directly from those journalists who are at the forefront of AI adoption. What are their learnings? And what can they recommend to other newsrooms who are just starting their AI journey?

Later in the day, we’ll be homing in on corporate AI implementation. Until now, the discourse around AI-related risks has been dominated by its development, rather than its adoption. But the fast pace at which business is embracing this new technology raises fundamental questions around integrity, security, ethics and unintended consequences.

Currently, 78% of the world’s companies are integrating AI into their business, with nearly half of them using AI tools in at least three business functions. In this race towards adoption, business leaders are being offered a wide range of tools. And companies of all sizes are scrambling to integrate AI across their operations and services.

They want to move fast, as they fear they’ll lose margins to more agile competitors. But this frenetic adoption comes with huge risks attached. For example, it’s often impossible to fully know which data sets AI tools have been trained on, with obvious consequences when it comes to issues such as bias, cyber-security and ethics. Then, of course, there’s the issue of accuracy.

A recent study produced by the Georgia Institute of Technology in partnership with OpenAI suggests that LLMs hallucinate because they have been trained to do so. In other words, the way the systems are trained rewards guessing over acknowledging that they are not able to provide the answer.

The risks are immense, particularly in terms of compliance, reputation, profitability, security, and human rights due diligence. And so companies are spending millions of dollars on fully exploring the wider implications of their AI roadmap. To unlock the full potential of AI, we need to understand how it’s being used. And we need to support leaders who want to adopt this technology responsibly and ethically.

And that is why, last year, on this very stage, the Thomson Reuters Foundation partnered with UNESCO to launch the AI Company Data Initiative (AICDI). AICDI is a voluntary survey designed to help organisations map their AI use so that they can understand and mitigate the risks they might face. We’ll be releasing the findings from our first survey early next year. You can find more about our AICDI initiative in the Thomson Reuters Foundation exhibition area.

This afternoon, we’ll feature real life examples of corporate AI adoption, and we’ll examine the benefits of voluntary disclosure.

We’ll continue the day by turning our focus to the impact of AI on access to justice, a right fundamental to any functioning democracy. AI is radically transforming the legal profession. According to Thomson Reuters – within the next year – AI could save lawyers up to five hours per week. This could unlock an average of $19,000 in billable hours per lawyer. In the U.S. alone, that’s approximately $32 billion in combined efficiencies for the sector.

The global legal technology market is projected to grow from $34 billion this year to almost $64 billion in the next seven years. The trend seems unstoppable. This year, we witnessed the launch of the world’s first AI law firm, which offers purely AI-driven services. Garfield.law promises to be ‘better, quicker and more affordable’.

Another example of innovation is JusticeText. The tool promises to free up time and resources for public defenders by using AI to scan hours of material, including camera footage and interrogation videos. When it comes to access to justice, generative AI can be a significant equaliser. It has the potential to boost efficiency by automating routine tasks, reduce costs, and provide more accessible information and support.

Earlier this year, a survey published by UNESCO found that 44% of people working in the legal and judicial systems are already using AI tools. But only 9% of them have received adequate training. This, of course, raises serious legal and ethical concerns, from data security to the quality of the data used to train the systems, including entrenched bias and inaccuracies that might significantly affect decision-making.

And with AI being used not just to summarise huge quantities of data, but also for sentencing, our panel this afternoon will look at how human rights protections can be embedded in the AI tools deployed across the justice system.

Finally, we’ll come full circle with our last panel of the day, examining the role of philanthropy at this critical moment in time. The world faces a $4.2 trillion funding gap to achieve the UN Sustainable Development Goals. Without investment, and with rising geopolitical tensions, it’s estimated that around 600 million people will be living in poverty beyond 2030.

The de facto shutdown of USAID, which led to an 83% drop in international aid from the United States, has made the financial support of private foundations, corporations and individual philanthropists even more important. Privately funded projects have always been part of the aid ecosystem. Private donors can move with agility and less bureaucracy. And many are already providing billions for initiatives ranging from healthcare to climate resilience, to journalism.

But private capital ushers in specific challenges, such as separating donor interests from the long-term interests of beneficiaries. And at this moment in time, most donors are already maxed out when it comes to the level of support that they are providing. So, are we seeing more players coming to the table? And what are the areas that are most likely to receive support in the current environment? We’ll be dissecting philanthropy’s role in defending democracy, so stay tuned for a powerful discussion.

We have a packed agenda.

Now, I want to take this moment to say thank you to all of our speakers, for their time and their expertise. I also want to say thank you to our event partners: Context, FT Strategies, Civicus and the Mozilla Foundation. Your support is so valued and appreciated. I’m excited that this year, our media partner is Devex, the platform for the global development community.

And of course, I want to thank our supporters. It’s because of their generosity that this event can be free to attend. And so, huge thanks to our Platinum Supporter, the People’s Postcode Lottery. We are incredibly grateful for your continued trust in us, and for your commitment to this initiative. I would also like to thank the Omidyar Network, and the Siegel Family Endowment. Your support is critical to the success of this event.

At the Thomson Reuters Foundation, we work every day to strengthen free, fair and informed societies, using our unique combination of legal and media expertise, alongside data intelligence. This conference is driven by a spirit of collaboration. We want to facilitate opportunities for our partners, friends and beneficiaries to come together and play a role in addressing some of the most critical issues of our time.

And so, thank you for being here and for playing your part. It’s never been more important.

More News

View All

Welcoming our new chairman – and honouring a remarkable legacy

Our CEO, Antonio Zappulla,…

Read More

How the MFC Secretariat supports the Media Freedom Coalition to protect independent media at home and abroad

The MFC Secretariat, hosted by the Foundation, was awarded the Cross of Merit from the…

Read More

Statement on the Closure of the Context News Brand

A statement on the Closure of the Context News Brand from…

Read MoreSupporting media and CSOs to curb illicit financial flows across sub-Saharan Africa

We…

Read More

Our impact in 2025: Building resilience in a turbulent year

Our CEO Antonio Zappulla reflects on 2025:…

Read More

Uncovering illicit financial flows: Training that transformed one journalist’s approach to reporting

Find out how training from the Thomson Reuters Foundation transformed Fidelis…

Read More

Legal needs are rising for NGOs amid attacks on civil society and funding cuts, our latest report finds

Our new report finds that legal needs amongst NGOs have risen significantly over the last…

Read More

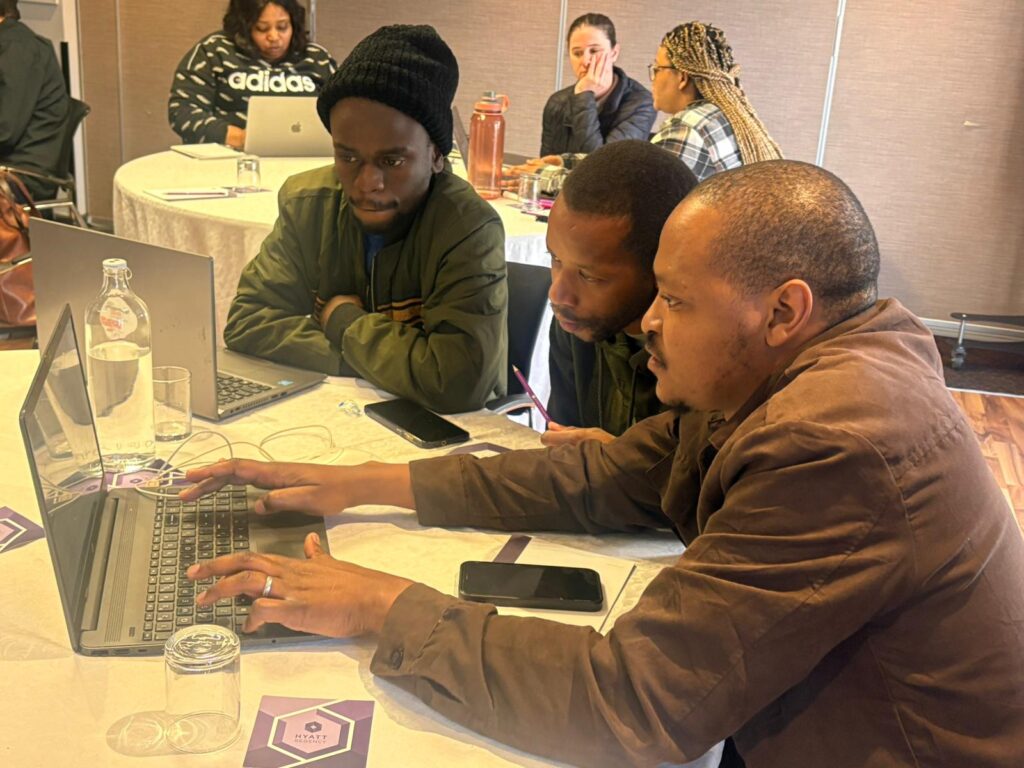

How South African newsrooms are benefiting from strategic and ethical AI adoption

We have…

Read More

World’s largest dataset shows transparency gaps in AI adoption

The Thomson Reuters Foundation…

Read More

The authoritarian playbook in action: Insights from Trust Conference 2025

Learn our Acting…

Read More